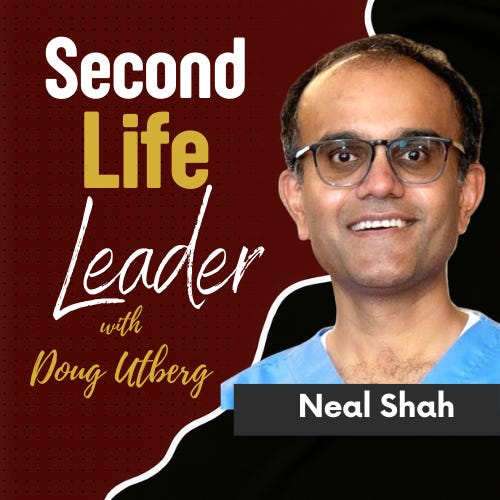

Healthcare innovator Neal Shah joins me to unpack how artificial intelligence is being used against patients—and how it can be used to fight back.

Most conversations about AI in healthcare focus on efficiency, cost savings, or shiny tools. This episode goes deeper. Neal Shah and I examine how insurers have quietly weaponized AI to deny care at scale, and why patients are losing not because they’re wrong, but because the system is stacked against them.

Neal shares how caring for his grandfather with dementia, and for his wife through years of cancer, exposed him to the realities of denial letters, administrative friction, and sheer exhaustion. We explore how claim denial rates jumped from 1.2% to nearly 20% nationwide, why most patients never appeal, and how insurers exploit the fact that an appeal takes hours while a denial takes seconds.

From there, we dig into how AI—trained on successful appeals, billing codes, medical research, and insurer coverage policies—can flip that imbalance. Not by gaming the system, but by restoring access to evidence, speed, and leverage for people who don’t have legal teams or financial backstops.

The conversation widens into elder care, end-of-life costs, administrative bloat, and why U.S. health outcomes don’t justify spending close to 20% of GDP on healthcare. This isn’t an anti-technology episode. It’s a clear-eyed look at incentives, power, and how tools can either centralize control or return it to individuals.

The lesson isn’t blind optimism about AI. It’s discernment: knowing where technology helps, where regulation lags, and how ordinary people can protect themselves inside systems that weren’t designed for fairness.

TL;DR

Insurers now programmatically deny ~20% of claims—up from 1.2% fifteen years ago

99% of patients whose claims are denied never appeal, despite high reversal rates

Of those who appeal, ~40% win; with AI support, success jumps to ~73%

Most denials stem from billing errors or weak documentation—not medical necessity

State insurance regulators provide external review boards most patients don’t know exist

AI can restore speed and evidence access—but doesn’t fix broken incentives alone

Healthcare costs are driven by administrative bloat, not clinical care

Elder care is optimized for real estate returns, not human outcomes

The real crisis isn’t technology—it’s confusion, exhaustion, and lack of agency

Memorable Lines

“A denial letter is the shadow of a gun.”

“Insurers deny care in seconds—patients are expected to respond in hours.”

“Most people lose not because they’re wrong, but because they’re tired.”

“AI didn’t break healthcare—it just exposed where power already lived.”

“Care is relational, but the system is designed to prevent relationships.”

Guest

Neal Shah — Healthcare innovator, author, and caregiver advocate

Founder of Counterforce Health and Carriya, focused on patient empowerment, insurance accountability, and improving elder care through technology and workforce redesign.

🔗 https://counterforcehealth.org

🔗 https://www.carriya.com

Why This Matters

Healthcare isn’t just expensive—it’s adversarial.

As AI accelerates denial systems faster than regulation can respond, patients are increasingly left alone inside bureaucracies they don’t understand, don’t control, and can’t afford to fight.

This episode reframes AI not as a threat or a cure-all, but as a leverage tool—one that can either deepen inequality or help ordinary people reclaim agency inside broken systems.

For founders, operators, and executives, this conversation mirrors a broader truth: when systems scale faster than ethics, responsibility shifts to individuals who understand how power actually works.

Stability isn’t restored by nostalgia or outrage.

It’s rebuilt by clarity, tools, and the willingness to confront reality head-on.